What’s going on at SBS2? I’m rather enjoying some of the shows they are running there, including the excellent ‘Orphan Black’, a smart sci-fi thriller. But some of the shows look very washed out. That includes ‘Orphan Black’, and also re-runs of ‘30 Rock’. Here’s a typical frame from the latter:

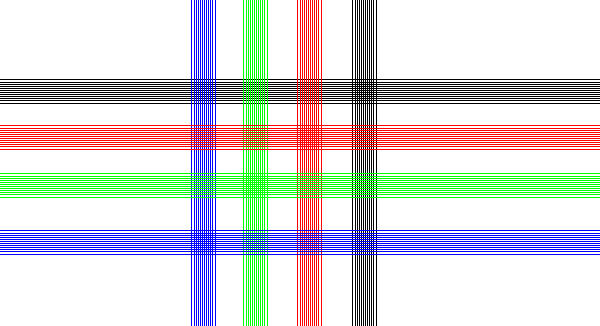

Blacks aren’t very dark. Whites aren’t very bright. The whole thing looks, as I said, washed out.

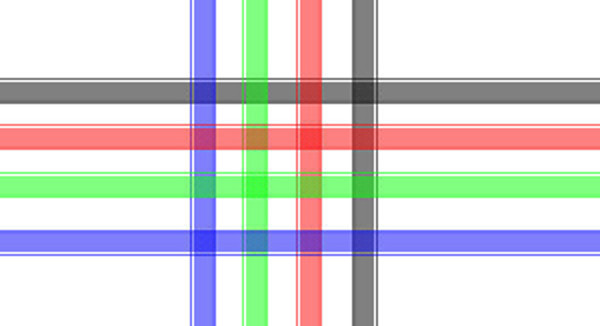

Here, by comparison, is a frame from an advertisement for another show that appeared on the same station a few minutes later:

Blacks are as black as your display will allow. Whites are as white, etc.

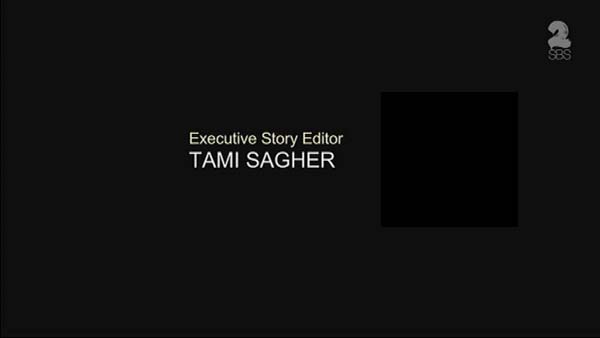

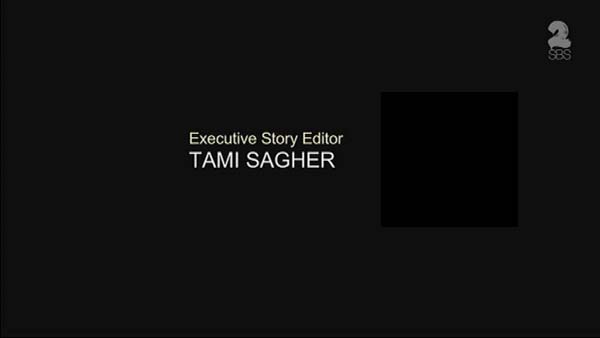

Just to make it clearer, here’s a frame from the closing credits of ‘30 Rock’. The background should be black. I’ve pasted a real full black box over the top:

On my large projection screen the washed-out appearance seems quite a bit worse than on a computer monitor. (On one of my computer’s monitors, I see now, the difference between the two blacks is almost invisible. I need to recalibrate that monitor.)

Looking closer: on a scale of 0-255 (the eight bits with which each colour is encoded), the darkest value for ‘30 Rock’ is 15 for all three colours. On the same scale the brightest white is 242 for all three colours, some 13 short of the maximum. By contrast (sorry), the other frame ranges from 0 for all three colours (bottom right of frame) to 255 for all three colours (the white in the ‘M’ box).
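The measurement above boils down to finding the smallest and largest 8-bit value in a frame. Here is a minimal sketch, assuming the frame has already been decoded into a list of (R, G, B) tuples; how the frame is captured and decoded is left out, and `channel_range` is a hypothetical helper name, not part of any tool:

```python
def channel_range(pixels):
    """Return (darkest, brightest) 8-bit value across all channels of all pixels.

    Assumes `pixels` is an iterable of (R, G, B) tuples with values 0-255.
    """
    values = [v for pixel in pixels for v in pixel]
    return min(values), max(values)


# A toy stand-in for the washed-out frame, using the values quoted above.
washed_out = [(15, 15, 15), (128, 100, 90), (242, 242, 242)]
# A toy stand-in for the full-contrast advertisement frame.
full_contrast = [(0, 0, 0), (60, 120, 200), (255, 255, 255)]

print(channel_range(washed_out))     # (15, 242)
print(channel_range(full_contrast))  # (0, 255)
```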

To my eyes the washed-out appearance was reminiscent of a mismatch between RGB video modes. There are two slightly different modes. As I mentioned, each colour is encoded using an 8-bit number. For computer-style signals, a 0 for a particular colour means zero intensity (i.e. black), while 255 is full blast. All three colours at 255 make full white.

But for historical reasons, the settings are different for consumer video RGB. For full black each colour is 16 on the 0 to 255 scale, while full brightness is 235. Values of 0 to 15 can be carried as well, but they just show as black. Values of 236 to 255 show at full brightness (full white when all three are at least 235).
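To make the two conventions concrete, here is a sketch of how a computer-style (0 to 255) value lands in the consumer video range (16 to 235). It assumes the common linear mapping that spreads the 256 codes over the 219 steps between 16 and 235; it is a simplified model rather than a description of any particular broadcast chain, and `full_to_limited` is a hypothetical name:

```python
BLACK_LIMITED = 16   # consumer video full black
WHITE_LIMITED = 235  # consumer video full brightness


def full_to_limited(v):
    """Map a computer-style 8-bit value (0-255) onto the 16-235 video range.

    Simplified linear model: 0 -> 16, 255 -> 235, everything else in between.
    """
    if not 0 <= v <= 255:
        raise ValueError("expected an 8-bit value")
    return round(BLACK_LIMITED + v * (WHITE_LIMITED - BLACK_LIMITED) / 255)


print(full_to_limited(0), full_to_limited(255))  # 16 235
```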

TVs are typically calibrated so that such an RGB signal is stretched: 16 appears on screen as 0, and 235 looks like 255. But if the display thinks the RGB is a computer-style signal, it won’t stretch the range, and the picture will look washed out.
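The stretch a TV applies can be sketched as the inverse mapping, with anything outside 16 to 235 clipped, so that darker codes show as black and brighter ones show at full brightness. This is the same simplified linear model as before, not any specific TV’s processing, and `limited_to_full` is a hypothetical name:

```python
def limited_to_full(v):
    """Stretch a 16-235 video value to 0-255, clipping out-of-range codes.

    Simplified linear model: 16 -> 0, 235 -> 255; values below 16 end up
    as 0 (black) and values above 235 end up as 255 (full brightness).
    """
    stretched = round((v - 16) * 255 / 219)
    return max(0, min(255, stretched))


# Video black and white become true black and white once stretched.
print(limited_to_full(16), limited_to_full(235))  # 0 255
# Out-of-range codes are clipped.
print(limited_to_full(15), limited_to_full(242))  # 0 255
```

Fed the 15 and 242 measured from the ‘30 Rock’ frame, the stretch gives 0 and 255, i.e. proper black and white. Without the stretch (the signal treated as computer-style), those values are shown as-is, and black stays a dark grey.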

The numbers for ‘30 Rock’ don’t quite match up (a range of 15-242 rather than 16-235), but my guess is nonetheless that somewhere in the delivery chain the video has been accidentally treated as PC-style.